Building Virtual Robots: Exploring Mechanical Simulation

“SphereBot” is an experiment in robotic simulation by one of our physics engineers, Tom Mirades. While Tom isn’t creating a hardware controller to drive a real-world replica, he is motivated by an adjacent question: “how close to reality can I make something behave?”

What's in a SphereBot?

Tom is a mechanical engineer by training and brings that perspective to physicalizing and programming 3D entities. He says that when building 3D bots, you can “engineer and program like you would in the real-world (and often get a surprise when you hit ‘play’). You learn from those surprises and do some re-engineering. But the best part is, unlike the real-world, you can re-engineer and re-test in minutes instead of hours or days.”

DeepMotion’s articulated physics engine, which can model parameters such as joints and torque, is designed to make engineering easier and more accurate for anyone attempting realistic simulation. In the future, we hope to move beyond simulation: we believe experiments like this one show real promise for hardware programming. As AI-driven physical simulation matures, we want robotics engineers to be able to use a fast-iteration approach like Tom’s to tune hardware in a virtual environment.

Researchers already use simulation environments and AI to build a range of robotic systems. For example, sim-to-real transfer techniques for computer vision are being commercialized to give real-world robots better visual discernment. Still, we have a long way to go before achieving 1:1 simulation-to-reality fidelity in motion control. We believe bridging this gap between simulation and reality will transform the robotics space.

Tom has shared some of his process below for bringing the SphereBot to life. Join our alpha for DeepMotion Avatar to create your own simulations using articulated physics!

The Process

To create this demo, I used a free 3D asset, Unity, and the DeepMotion Avatar physics SDK.

Physicalizing the Model

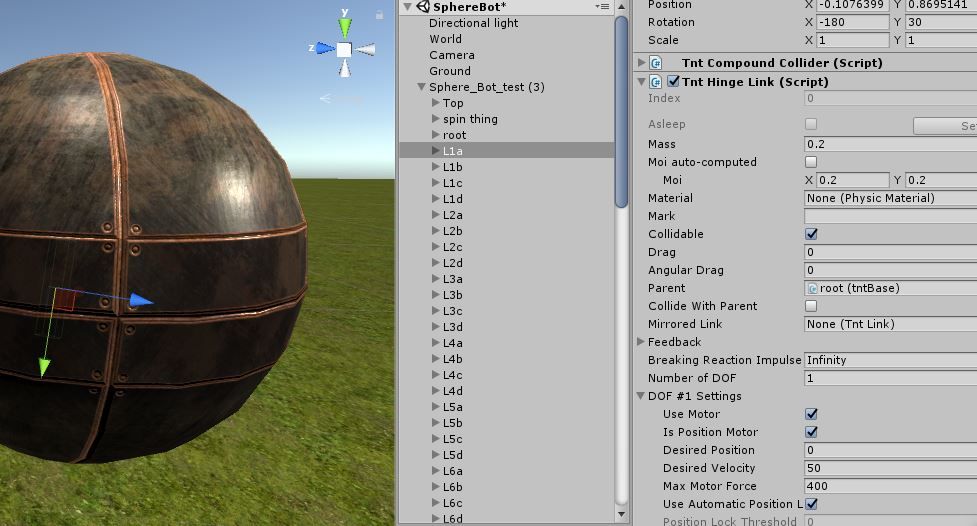

The first step is to physicalize the model, which is fairly straightforward: I place Unity colliders under its surfaces. I generally try to use just one collider per moving part. Each moving part goes into its own GameObject (which I rename for clarity) along with its associated collider, and the collider should be centered in that GameObject for proper weight balance.
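
To make the centering point concrete, here is a minimal Python sketch of the geometry involved. It is not Unity or DeepMotion code (Tom’s setup was done in the Unity editor); the function name `fit_box_collider` and the limb’s vertex list are hypothetical. It fits an axis-aligned box around a part’s vertices and returns the box’s center, which for a uniform-density box is also its center of mass.

```python
from typing import Iterable, Tuple

Vec3 = Tuple[float, float, float]


def fit_box_collider(vertices: Iterable[Vec3]) -> Tuple[Vec3, Vec3]:
    """Return (center, size) of the axis-aligned box that encloses a part's vertices."""
    pts = list(vertices)
    if not pts:
        raise ValueError("a moving part needs at least one vertex")
    mins = [min(p[i] for p in pts) for i in range(3)]
    maxs = [max(p[i] for p in pts) for i in range(3)]
    # The box's geometric center is also its center of mass if density is uniform.
    center = tuple((lo + hi) / 2 for lo, hi in zip(mins, maxs))
    size = tuple(hi - lo for lo, hi in zip(mins, maxs))
    return center, size


# Hypothetical limb: a 0.2 x 1.0 x 0.2 block modeled with its pivot at one end (y = 0).
limb_vertices = [(x, y, z) for x in (-0.1, 0.1) for y in (0.0, 1.0) for z in (-0.1, 0.1)]
center, size = fit_box_collider(limb_vertices)
print("collider center:", center, "size:", size)
```

Here the collider belongs at y = 0.5, halfway along the limb, rather than at the pivot. In Unity, a Rigidbody derives its center of mass from its attached colliders by default, which is why an off-center collider skews a part’s balance.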

Next, I added hinge joints, aligning each one with the corresponding hinge point in the model’s rig. The SphereBot model was designed so that every joint is a hinge.
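
A hinge allows rotation about a single axis passing through an anchor point, so the anchor’s placement determines where a limb ends up. The Python sketch below illustrates this with Rodrigues’ rotation formula. It is a generic geometry example, not the Avatar SDK’s joint solver; `rotate_about_hinge` and the limb coordinates are hypothetical.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def rotate_about_hinge(point: Vec3, anchor: Vec3, axis: Vec3, angle_deg: float) -> Vec3:
    """Rotate `point` about the line through `anchor` along `axis` (Rodrigues' rotation formula)."""
    norm = math.sqrt(sum(c * c for c in axis))
    if norm == 0:
        raise ValueError("hinge axis must be non-zero")
    k = tuple(c / norm for c in axis)                    # unit hinge axis
    v = tuple(p - a for p, a in zip(point, anchor))      # point relative to the anchor
    theta = math.radians(angle_deg)
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    k_cross_v = (
        k[1] * v[2] - k[2] * v[1],
        k[2] * v[0] - k[0] * v[2],
        k[0] * v[1] - k[1] * v[0],
    )
    k_dot_v = sum(kc * vc for kc, vc in zip(k, v))
    rotated = tuple(
        v[i] * cos_t + k_cross_v[i] * sin_t + k[i] * k_dot_v * (1 - cos_t) for i in range(3)
    )
    return tuple(r + a for r, a in zip(rotated, anchor))  # back to world space


tip = (0.0, 1.0, 0.0)  # end of a hypothetical limb whose rig pivot is at the origin
aligned = rotate_about_hinge(tip, anchor=(0.0, 0.0, 0.0), axis=(0.0, 0.0, 1.0), angle_deg=90)
offset = rotate_about_hinge(tip, anchor=(0.1, 0.0, 0.0), axis=(0.0, 0.0, 1.0), angle_deg=90)
print("aligned hinge:", aligned)
print("hinge 0.1 off the pivot:", offset)
```

With the anchor on the rig’s pivot, the tip swings to roughly (-1, 0, 0). Moving the anchor 0.1 units off the pivot lands it near (-0.9, -0.1, 0), so the limb both rotates and shifts.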

Making the Bot Functional

I then used the Avatar editor to assign values to the SphereBot’s physical parameters, which enables the joints. These parameters must be set before you can define key poses or produce procedural animations.
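
One way to picture the “parameters first, poses second” rule is as a joint that refuses position targets until it has been configured. The Python sketch below is hypothetical throughout: the `HingeJoint` class and its field names (stiffness, damping, angle limits) are illustrative assumptions and do not mirror the Avatar editor’s actual fields.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class HingeJoint:
    """Hypothetical joint record; field names are illustrative, not the Avatar SDK's API."""
    name: str
    stiffness: Optional[float] = None   # how hard the joint motor pulls toward its target
    damping: Optional[float] = None     # how strongly it resists angular velocity
    min_angle: Optional[float] = None   # lower rotation limit, degrees
    max_angle: Optional[float] = None   # upper rotation limit, degrees
    target: float = 0.0                 # current desired position, degrees

    def missing_parameters(self) -> List[str]:
        fields = ("stiffness", "damping", "min_angle", "max_angle")
        return [f for f in fields if getattr(self, f) is None]

    def set_target(self, angle: float) -> float:
        missing = self.missing_parameters()
        if missing:
            raise RuntimeError(f"{self.name}: set {', '.join(missing)} before posing")
        # Clamp the request into the joint's allowed range.
        self.target = max(self.min_angle, min(self.max_angle, angle))
        return self.target


knee = HingeJoint("knee_l")
try:
    knee.set_target(45.0)
except RuntimeError as err:
    print(err)  # the joint has no physical parameters yet

knee.stiffness, knee.damping = 40.0, 8.0
knee.min_angle, knee.max_angle = 0.0, 150.0
print(knee.set_target(200.0))  # clamped to the 150-degree limit
```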

After this, I set the root to ‘Kinematic’ so that it stays anchored in space. With the root anchored, I can move the limbs by adjusting other parameters.
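
A kinematic body is moved only by explicit code and is skipped by the physics integrator, so gravity and collisions do not push it around. This is similar in spirit to Unity’s `Rigidbody.isKinematic`. The one-dimensional Python toy below (no joints, no ground, hypothetical `Body` and `step` names) shows the difference. It is not how Unity or DeepMotion implement their solvers.

```python
from dataclasses import dataclass
from typing import List

GRAVITY = -9.81  # m/s^2 along the vertical axis


@dataclass
class Body:
    name: str
    height: float
    velocity: float = 0.0
    kinematic: bool = False  # kinematic bodies are moved only by code, never by forces


def step(bodies: List[Body], dt: float) -> None:
    """Advance one semi-implicit Euler step; kinematic bodies are skipped entirely."""
    for body in bodies:
        if body.kinematic:
            continue
        body.velocity += GRAVITY * dt
        body.height += body.velocity * dt


root = Body("root", height=1.0, kinematic=True)
loose = Body("unanchored_part", height=1.0)
for _ in range(10):
    step([root, loose], dt=1 / 60)
print(root.height, round(loose.height, 4))
```

The anchored root stays at its starting height while the unanchored body falls, which is why pinning the root makes it practical to test limb motion in isolation.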

Setting Key Poses

Now, when I select a joint and enter a number in the ‘Desired Position’ field, the corresponding limb should move. I went through all the joints and found the values that give the bot a standing pose.
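
Physics engines commonly implement a position target with a proportional-derivative (PD) servo: a spring term pulls the joint toward the desired angle, and a damping term resists its velocity. We have not confirmed that DeepMotion Avatar’s joint motors work exactly this way, so treat the Python sketch below as an illustration of the general technique. The gains, inertia, and `drive_hinge` function are all hypothetical.

```python
from typing import Tuple


def drive_hinge(desired_deg: float, stiffness: float = 40.0, damping: float = 8.0,
                inertia: float = 1.0, dt: float = 1 / 120, steps: int = 600) -> Tuple[float, float]:
    """Simulate one hinge driven toward `desired_deg` by a PD servo.

    Returns (final angle, peak angle) in degrees; units are illustrative.
    """
    angle, velocity, peak = 0.0, 0.0, 0.0
    for _ in range(steps):
        # Spring term pulls toward the target; damping term resists motion.
        torque = stiffness * (desired_deg - angle) - damping * velocity
        velocity += (torque / inertia) * dt   # semi-implicit Euler: update velocity first
        angle += velocity * dt
        peak = max(peak, angle, key=abs)      # largest excursion seen so far
    return angle, peak


final, peak = drive_hinge(45.0)
print(f"settled at {final:.3f} deg, peaked at {peak:.2f} deg")
```

With these made-up gains, the joint overshoots by a few degrees before settling. Raising the damping removes the overshoot, while lowering the stiffness makes the limb sluggish. This kind of tuning is one plausible source of the surprises Tom describes when he hits ‘play’.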

I then put those values in a script so I could toggle from the ball pose to the standing pose and back again. I follow the same general approach for more complicated moves, iterating for as long as it takes; how long that is depends on how intricate you want your procedural motions to be.
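
Because DeepMotion Avatar runs inside Unity, Tom’s actual script would be C#. The Python sketch below only shows the shape of the idea: key poses stored as maps from joint name to desired position, plus a toggle between them. The joint names and angles are hypothetical, and the optional `blend` helper, which ramps targets between poses instead of snapping, is our own addition rather than something from Tom’s setup.

```python
from typing import Dict

Pose = Dict[str, float]  # joint name -> desired position, degrees

# Hypothetical joint names and angles, for illustration only.
BALL_POSE: Pose = {"hip_l": 0.0, "hip_r": 0.0, "knee_l": 0.0, "knee_r": 0.0}
STAND_POSE: Pose = {"hip_l": -35.0, "hip_r": -35.0, "knee_l": 70.0, "knee_r": 70.0}


class PoseToggler:
    """Flip between two key poses, starting from the first."""

    def __init__(self, first: Pose, second: Pose):
        if first.keys() != second.keys():
            raise ValueError("both poses must set the same joints")
        self.poses = (first, second)
        self.index = 0

    @property
    def current(self) -> Pose:
        return self.poses[self.index]

    def toggle(self) -> Pose:
        self.index = 1 - self.index
        return self.current


def blend(a: Pose, b: Pose, t: float) -> Pose:
    """Linearly interpolate joint targets: t=0 gives a, t=1 gives b."""
    return {joint: a[joint] + (b[joint] - a[joint]) * t for joint in a}


toggler = PoseToggler(BALL_POSE, STAND_POSE)
print(toggler.toggle())                   # standing pose
print(toggler.toggle())                   # back to the ball pose
print(blend(BALL_POSE, STAND_POSE, 0.5))  # halfway between the two
```

Each returned pose would then be written into the joints’ desired-position targets. Longer moves can be built the same way, as a sequence of key poses.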

Interested in producing your own mechanical simulations? Stay tuned for an in-depth physics simulation tutorial from Tom, and start building virtual bots with DeepMotion Avatar!