How To Make 3 Point Tracked Full-Body Avatars in VR

Virtual Reality is still in its infancy, with major challenges standing in the way of mass adoption, as well as what many consider the medium’s ultimate value proposition: to give users a sense of “presence”, the feeling of being fully transposed and immersed in an interactive, virtual world. A cornerstone issue standing in the way of presence is embodiment. Anyone who has helped run a VR experience will note that one of the first things many people do when entering VR is to look down towards their feet. Rather than looking at the far stretches of their new universe, users seem to have an innate concern with their own physicality.

Simple solutions for user representation include floating hands or floating representations of hand controllers. Larger social platforms and SDKs, such as Facebook Spaces and the Oculus Avatar SDK, go a step further with upper-body representation. Some developers take on the arduous task of implementing a full-body IK solution (typically using Final IK or IKinema), usually with blended animations to address strange limb rotations in areas like the elbow, as well as basic lower-body movements. DeepMotion Avatar offers full-body locomotion for VR Avatars using 1-6 points of tracking.

Our physics-based solution uses an inverse dynamics (ID) algorithm, rather than inverse kinematics (IK), together with natural joint constraints to infer lifelike user movements and to handle collision detection. This short blog is geared towards intermediate developers and will cover how to use our Avatar SDK in your own VR experience or game, focusing on the 3 point configuration for content aimed at standard home rigs (which typically include a headset and two hand controllers). We will go over implementation for both Unity and Unreal. DeepMotion Avatar is currently in closed alpha, and available to test for early adopters via this application.

What Is Three Point Tracking (3PT)?

Three point tracking (3PT from now on) means controlling a character or avatar in real time using 3 controller points. In this case the controlled object is the player character, and the technique is highly useful for full-body motion reconstruction in virtual reality. VR rigs like the Vive or the Oculus Rift with Touch Controllers have 3 tracked objects that the user controls for virtual interaction: the head-mounted display and the two controllers give us 3 easy points to use for 3PT. Typical IK solutions use geometry to snap limbs into certain positions, while we use physics and inverse dynamics (ID) to move the Avatar’s limbs fluidly.

The DeepMotion Avatar 3PT system will use the position and rotation of these 3 devices as tracking points for the character’s head and hands to reference.

Setting up 3PT for your DeepMotion Avatar Character

The basic process involves taking your DeepMotion Avatar character and assigning 3 objects that the Avatar character controller will use to move the hands and head around.

For Unity

Once the DeepMotion Avatar character is brought into the scene, open up the parent game object, find the root game object (or simAvatarRoot if you imported an .avt file), open it up, and click on the Humanoid Controller child. On the humanoid controller there is a script called “tntHumanoidController”. In this script you will find the fields “Head Target”, “L Hand Target”, and “R Hand Target”. The corresponding parts of the Avatar character will track whichever game objects are assigned to these fields. For VR-enabled 3PT, assign the VR game objects (the headset and the two controllers).

For Unreal Engine

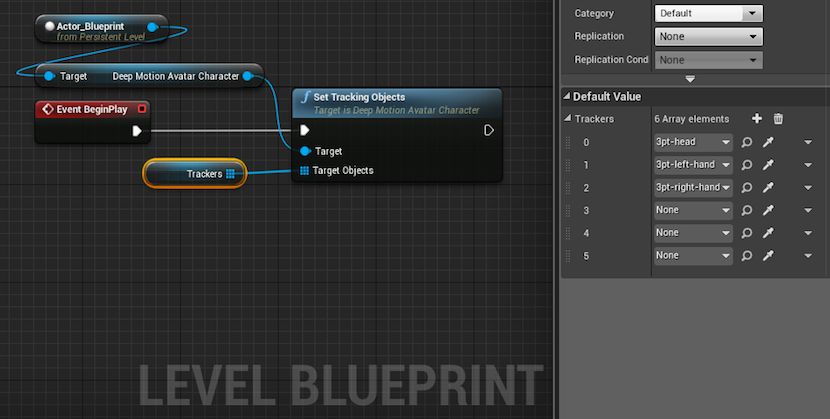

Once the DeepMotion Avatar character is in the level, setup takes two steps: create the head and hand tracking objects in the level, then wire them up in the level blueprint:

- Create the 3 objects to be used for tracking as empty actors in the level. These will be referenced in the next step.

- Set the head and hand objects to be the trackers in the “Set Tracking Objects” function for the DeepMotion Avatar character.

You can create the empty actors anywhere in the level, but it is preferable to place them so their initial positions match the location of the body part each one will be assigned to.

The second step uses the “DeepMotion Avatar Character > Set Tracking Objects” function to have the head and hands follow the defined tracking objects. Even though only 3 objects are being tracked, the input to the “Trackers” variable in the “Set Tracking Objects” function must be a 6-item array of actors. The order for the trackers is head, left hand, then right hand. The last 3 items in the array are left blank.
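The array layout described above can be sketched outside the engine. This is a minimal, engine-agnostic Python illustration, not Unreal or DeepMotion code: the helper name `build_tracker_array` and the actor names are hypothetical, and strings stand in for actor references. Only the slot count and ordering come from the text.

```python
from typing import List, Optional

TRACKER_SLOTS = 6  # the "Trackers" input must hold 6 items, per the text


def build_tracker_array(head: str, left_hand: str, right_hand: str) -> List[Optional[str]]:
    """Return a 6-slot list: head, left hand, right hand, then 3 blank slots.

    Strings stand in for actor references (hypothetical placeholders).
    """
    trackers: List[Optional[str]] = [None] * TRACKER_SLOTS
    trackers[0] = head        # slot 0: head
    trackers[1] = left_hand   # slot 1: left hand
    trackers[2] = right_hand  # slot 2: right hand
    return trackers           # slots 3-5 stay blank for 3 point tracking


trackers = build_tracker_array("HeadActor", "LeftHandActor", "RightHandActor")
print(trackers)
```

Keeping the array at a fixed length with blank trailing slots means the same layout works whether 3 or more tracking points are in use.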

Unreal Engine VR

Before setting up your character for VR 3 point tracking, make sure to copy the “defaultInput.ini” file into the “Config” folder in your UE4 project.

Setting up 3 point tracking for VR in Unreal is a bit more complex, so we created a set of blueprints for you to use with your character. If you do not wish to use the blueprints provided in the Avatar package, you can check this tutorial for information on setting up your own tracking code.

-

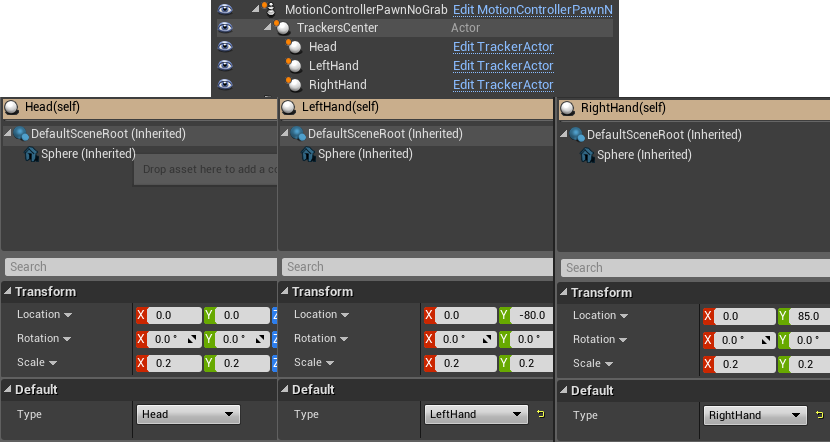

The main blueprint used to create the tracking character is MotionControllerPawnNoGrab. This blueprint has both the character and coding blocks to track the character to the VR device. You will need to add a few things to this blueprint in order to complete it.

- In the blueprint, find the DeepMotionAvatarCharacter child, and select it. In the DeepMotionAvatarCharacter section, find the Asset field in yellow, and attach the included MidasManVR DeepMotionAvatarAsset.

- Next you will need to create an empty actor and parent it to the MotionControllerPawnNoGrab that you placed in the scene earlier. Name it “TrackersCenter”.

- Place 3 TrackerActor blueprints in the scene and attach them to the “TrackersCenter” actor. Name these actors “Head”, “LeftHand”, and “RightHand” respectively, and assign the Type values to match the name.

If you follow these steps, the VR tracking setup should work. If you want to create your own setup from scratch, we recommend using the MotionControllerPawnNoGrab blueprint as a reference. Note that MotionControllerPawnNoGrab also includes movement.

Recommended Kp/Kd Settings for Tracking

This is largely Unity-specific, as there is currently no way to modify the Kp/Kd for tracking objects directly in Unreal.

To modify the Kp/Kd for the trackers, go to the same game object component where you added the trackers (Character > root/simAvatarRoot > HumanoidController). In the tntHumanoidController component, you will find fields for “Limb Tracking Kp” and “Limb Tracking Kd”.

Kp is the strength of the tracking force: how quickly the tracked part follows the tracking point as it moves and changes direction. Kd is the damping of the tracking force: how strictly changes in the tracking point’s velocity are followed. A non-zero value for Kd gives the tracked part some room to accelerate and decelerate. Think of the values as describing a spring. A higher Kp is like a stiffer spring, where the object on the other end follows closely. Kd is the elasticity or “springiness” of the spring: a higher value lets the spring stretch more as the velocity changes, and a value of zero makes the spring completely inelastic.
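To make the roles of the two gains concrete, here is a minimal one-dimensional sketch of a textbook spring-damper (PD) tracking force. It is an assumption that the Avatar SDK's tracking force takes this form; the unit mass, the time step, the gain values, and the function names `pd_force` and `step_response` are all illustrative and not taken from the SDK.

```python
def pd_force(kp, kd, target_pos, target_vel, pos, vel):
    """Textbook PD force: spring term on position error, damper on velocity error."""
    return kp * (target_pos - pos) + kd * (target_vel - vel)


def step_response(kp, kd, mass=1.0, dt=0.001, steps=2000):
    """Simulate a part at rest at 0 chasing a stationary target at 1.

    Unit mass and a 1 ms step are assumptions for illustration only.
    Returns (final_position, peak_position).
    """
    pos, vel, peak = 0.0, 0.0, 0.0
    for _ in range(steps):
        force = pd_force(kp, kd, 1.0, 0.0, pos, vel)
        vel += (force / mass) * dt  # semi-implicit Euler: update velocity first
        pos += vel * dt             # then position, using the new velocity
        peak = max(peak, pos)
    return pos, peak


damped_final, damped_peak = step_response(kp=2000.0, kd=100.0)
_, undamped_peak = step_response(kp=2000.0, kd=0.0)
print(f"damped: final={damped_final:.3f}, peak={damped_peak:.3f}")
print(f"undamped: peak={undamped_peak:.3f}")
```

Under these assumptions, the run with damping settles on the target without passing it, while the run with Kd set to zero overshoots and keeps oscillating. That is the usual trade-off when tuning the two gains together.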

For Unity

Our recommended Kp for multipoint tracking is 2000 for a DeepMotion Avatar character coming from the Web SimRig Editor pipeline. This value is high enough that the time lag between the motion of the tracking point and the tracked limb goes unnoticed, but low enough that throwing the tracking point around won’t also throw the character around. A Kd of 100 is recommended for the same reasons. To keep the character stable, you want a good balance between how elastic the motion feels and movement that feels too “floaty”. When tweaking these values, you generally want the Kp to be an order of magnitude higher than the Kd.
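One rough way to sanity-check a Kp/Kd pair while tweaking is to look at the gain ratio and at the damping ratio of an idealized spring-damper. The sketch below assumes a textbook PD model with a unit effective mass, which is a simplification rather than a description of the simulated character. The helper names are hypothetical, and the numbers are only as meaningful as the mass assumption.

```python
import math


def gain_ratio(kp, kd):
    """Ratio of Kp to Kd; the text suggests roughly an order of magnitude."""
    return kp / kd


def damping_ratio(kp, kd, mass=1.0):
    """Damping ratio of an ideal spring-damper: kd / (2 * sqrt(kp * mass)).

    Below 1 the response overshoots, at 1 it is critically damped,
    above 1 it is overdamped. The unit mass is an assumption.
    """
    return kd / (2.0 * math.sqrt(kp * mass))


ratio = gain_ratio(2000.0, 100.0)
zeta = damping_ratio(2000.0, 100.0)
print(f"Kp/Kd ratio: {ratio:.1f}")
print(f"damping ratio (unit mass assumed): {zeta:.3f}")
```

For a heavier or lighter effective mass the damping ratio shifts, so treat this as a starting point for tuning by feel rather than a target value.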

For Unreal Engine

Currently, the only way to change the Kp/Kd for the tracking is by modifying the values in the Web SimRig editor, and importing/livesyncing the AVT. The default values of a Kp of 2000 and a Kd of 100 (same as Unity) should work well for DeepMotion Avatar characters brought into Unreal via the web pipeline.

Elevation Changes

The humanoid controller will detect the height of the ground and adjust the walking to account for changes in terrain elevation. This can be useful for VR avatars where the ground has slight elevation changes while the player moves the virtual avatar around. Of course, this is not the end-all solution, since increasing the height of the ground without changing the height of the character will result in the character crouch-walking. This feature should be combined with game code to give smoother avatar walking experiences.
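The "game code" mentioned above could take many forms. One minimal sketch, assuming a y-up coordinate system and tracker positions stored as plain tuples, is to raise all tracking points by a smoothed copy of the ground height under the player so the avatar is not forced into a crouch. The function names, the `response` rate, and the sample heights are hypothetical.

```python
import math


def smooth_ground_offset(current_offset, ground_height, dt, response=8.0):
    """Ease the vertical offset toward the ground height (exponential smoothing).

    `response` is an illustrative rate in 1/seconds, not an SDK parameter.
    """
    alpha = 1.0 - math.exp(-response * dt)
    return current_offset + (ground_height - current_offset) * alpha


def offset_trackers(trackers, offset):
    """Raise every tracker by `offset`; positions are (x, y, z) with y up (assumed)."""
    return {name: (x, y + offset, z) for name, (x, y, z) in trackers.items()}


raw = {
    "Head": (0.0, 1.7, 0.0),
    "LeftHand": (-0.3, 1.0, 0.2),
    "RightHand": (0.3, 1.0, 0.2),
}

offset = 0.0
for _ in range(200):  # about 2.2 seconds at 90 frames per second
    offset = smooth_ground_offset(offset, ground_height=0.5, dt=1.0 / 90.0)

adjusted = offset_trackers(raw, offset)
print(adjusted["Head"])
```

Smoothing the offset avoids a visible pop when the detected ground height changes abruptly. In an engine with z up, the same idea applies to the z component instead.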

Stairs in Your Environment

In addition to allowing smooth traversal up and down slopes, the avatar controller also understands stairs. Without any change to the height of the tracking points, the avatar’s feet will adjust the walk cycle to match the changing height of each step. Combine this with smoothly increasing the height of the tracking points to match the steps, and you get a smooth stair-climbing walk without needing to make a new walk cycle just for stairs and steps.
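A simple way to raise the tracking points smoothly over a staircase is a linear ramp from the bottom of the stairs to the top. The sketch below assumes uniform steps and a known horizontal distance travelled along the staircase; the function name and the step dimensions are illustrative, not part of the SDK.

```python
def stair_height_offset(distance, step_depth, step_height, num_steps):
    """Height to add to the tracking points after walking `distance` up the stairs.

    Uses a linear ramp over the whole staircase instead of jumping at each
    step edge, so the tracking points rise smoothly. Uniform steps are assumed.
    """
    total_run = step_depth * num_steps
    total_rise = step_height * num_steps
    t = min(max(distance / total_run, 0.0), 1.0)  # clamp to the staircase
    return t * total_rise


# Example: 10 steps, each 0.30 deep and 0.18 high (illustrative dimensions).
for d in (0.0, 1.5, 3.0, 4.0):
    print(d, stair_height_offset(d, step_depth=0.30, step_height=0.18, num_steps=10))
```

The resulting offset would be added to the head and hand tracking points each frame, leaving foot placement on the individual steps to the avatar controller.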

DeepMotion is working on core technology to transform traditional animation into intelligent simulation. Through articulated physics and machine learning, we help developers build lifelike, interactive, virtual characters and machinery. Many game industry veterans remember the days when NaturalMotion procedural animation used in Grand Theft Auto was a breakthrough from IK-based animation; we are using deep reinforcement learning to do even more than was possible before. We are creating cost-effective solutions beyond keyframe animation, motion capture, and inverse kinematics to build a next-gen motion intelligence for engineers working in VR, AR, robotics, machine learning, gaming, animation, and film. Interested in the future of interactive virtual actors? Learn more here or sign up for our newsletter.