Machine Learning and the New Forms of Motion Capture for 3D Data

In the early 20th century, transferring real-world human motion to characters was a process done by hand. The hack? A technique made popular by Betty Boop creator Max Fleischer, whereby animators traced magnified live-action footage onto transparencies. This early form of rotoscoping was one of the first methods that allowed animators to capture the subtlety and complexity of real-life movement in their cartoons.

Motion capture technology has come a long way from the rotoscoping of the 1920s, and is responsible for a diverse, $120M+ industry of hardware, software, actors, and technical specialists collaborating to digitize movement. Big-budget Hollywood films like Avatar, Lord of the Rings, and Planet of the Apes have become emblems of the possibilities of motion capture acting; Andy Serkis’ rendition of the skulking Middle-earth creature, Gollum, proves that with enough talent and financing, motion capture can be an art-worthy vehicle for motion transference.

Motion capture suits and markers are recognizable to the general public as part of high-tech filmmaking. Motion capture is also widely used in games, both to create simple motion sequences like walk or run cycles and as a basis for intricate combat, athletic, or gesturing motion.

Motion Capture Costs and Methods

While the general concept of motion capture is known by gamers and entertainment consumers alike, the full complexity and cost of the process aren’t always apparent.

Capture: the cost of motion capture varies greatly depending on the desired quality, location, studio, and talent. Major game and film studios will often have their own motion capture hardware and resources, at a high upfront cost, to ensure creative control and avoid logistical complexity down the line. Otherwise, a rental is in order. You can expect a base rental fee of at least $1,000 per day. On top of base fees there will be additional fees for equipment rentals, technicians and production staff, actors, directors, set building, and more; each item can cost hundreds to thousands of dollars per day as well. All told, one day of filming in a rental studio will set you back $4,000 or more.

Editing: many don’t realize that capture is only the beginning. Once the raw data is generated in the studio, it must be cleaned with a number of post-processing methods that address common motion capture artifacts like jitter and foot gliding. This post-processing can cost $10-$20 per second of data, putting the cost of post-processing a minute of footage at $600-$1,200.

Retargeting: once a motion clip has been properly edited, the motion needs to be adjusted to fit the 3D model it will animate. This is called retargeting, and it can also cost $10-$20 per character per second. If you would like to use the same motion for multiple characters (as many do for games or when creating crowd simulations), this cost is multiplied by the number of characters.

Integration: Once a character has been animated with the edited, retargeted motion data, there are a number of additional integration costs depending on the medium. It can be costly to blend the animation into another sequence, or to create transitions for game characters.
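To make the arithmetic above concrete, here is a rough budgeting sketch in Python. It only uses the ballpark figures quoted in this section; the `MocapJob` fields and the `estimate_cost` function are illustrative names, not part of any real tool. The $4,000 day rate is a floor, so even the "high" figure understates an expensive shoot, and integration costs are left out because no rate is given for them.

```python
from dataclasses import dataclass


@dataclass
class MocapJob:
    """Inputs for a rough budget; all field names are illustrative."""
    studio_days: int      # days spent in a rental studio
    clip_seconds: float   # seconds of motion kept after capture
    characters: int       # number of 3D characters sharing the motion


def estimate_cost(job, day_rate=4000, per_second=(10, 20)):
    """Return a (low, high) USD estimate from the ballpark rates above.

    day_rate   -- assumed all-in studio cost per day (the quoted floor).
    per_second -- editing and retargeting are each quoted at $10-$20.
    """
    capture = job.studio_days * day_rate
    totals = []
    for rate in per_second:
        editing = job.clip_seconds * rate
        # Retargeting is charged per character, per second.
        retargeting = job.clip_seconds * rate * job.characters
        totals.append(capture + editing + retargeting)
    return tuple(totals)


# One studio day, one minute of usable motion, three characters.
print(estimate_cost(MocapJob(studio_days=1, clip_seconds=60, characters=3)))
# (6400, 8800)
```

Even in this small example, retargeting the same minute of motion to three characters costs more than cleaning it, which is why per-character costs matter for crowd scenes.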

There are a few types of motion capture, the most widely used being optical and inertial. The most common form, optical motion capture, uses a series of cameras placed around a room and reflective markers on actors’ joints to reconstruct motion. The cameras track the reflective markers with sub-millimeter precision, yielding some of the most accurate data available. However, optical motion capture requires extensive cleanup for occlusions and for the misidentification or swapping that can occur when markers come too close to one another.
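As a small illustration of the occlusion cleanup mentioned above, the sketch below fills short gaps in a single marker's track by linear interpolation. This is one of the simplest gap-filling approaches and is shown only as an example; it is not a description of any particular studio's or vendor's pipeline. The `fill_gaps` name and the convention of marking occluded frames with `None` are assumptions.

```python
def fill_gaps(track):
    """Linearly interpolate None entries in a list of (x, y, z) samples.

    Gaps at the very start or end have a known sample on only one side,
    so they are left as None.
    """
    filled = list(track)
    known = [i for i, p in enumerate(track) if p is not None]
    # Walk over each pair of neighbouring known samples and fill between them.
    for left, right in zip(known, known[1:]):
        span = right - left
        for i in range(left + 1, right):
            t = (i - left) / span
            filled[i] = tuple(
                a + t * (b - a) for a, b in zip(track[left], track[right])
            )
    return filled


# A marker that is hidden from the cameras for two frames.
marker = [(0.0, 1.0, 0.0), None, None, (3.0, 1.0, 0.6)]
print(fill_gaps(marker))
```

Linear interpolation is only plausible for short gaps; longer occlusions and swapped markers are what make optical cleanup labor-intensive.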

Inertial motion capture uses suits with built-in inertial sensors to approximate 3D joint positions, avoiding the occlusion issues and logistical barriers inherent in external sensors. This form of motion capture comes with specialized hardware for the face, hands, and body. The hardware can be restrictive for actors, and the results often suffer from drift, struggle with fast motion, and lack global 6DoF positioning. Both methods have strengths and optimal use cases, many of which will not be replaced any time soon.
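One way to build intuition for the drift mentioned above: position obtained by integrating acceleration twice accumulates any constant sensor bias quadratically over time. The sketch below simulates a motionless sensor with a small bias. The bias value, sample rate, and function names are assumptions chosen for illustration, and real inertial systems combine several sensors and body models rather than integrating one signal naively.

```python
def integrate_position(accel_samples, dt):
    """Integrate acceleration twice (simple Euler steps) to get position."""
    velocity = 0.0
    position = 0.0
    for a in accel_samples:
        velocity += a * dt
        position += velocity * dt
    return position


def drift_after(seconds, bias=0.01, rate_hz=100):
    """Position error (m) for a motionless sensor with a constant bias (m/s^2)."""
    n = int(seconds * rate_hz)
    return integrate_position([bias] * n, 1.0 / rate_hz)


for seconds in (1, 10, 60):
    print(f"{seconds:>3} s -> {drift_after(seconds):.3f} m of drift")
```

The error grows roughly as 0.5 * bias * t^2, so a bias that is negligible over one second becomes many meters over a minute.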

Pose Estimation and Motion Capture

Professional motion capture and integration is an intricate process with numerous stages, and the industry continually innovates to address its many pain points. Recent advancements in AI, particularly in the field of computer vision, are showing promising results for a new form of capture. Machine learning can address some capture pain points and broaden use cases by reducing the reliance on sensors and cameras.

Enter pose estimation, a novel form of markerless motion capture that generates motion by inferring 3D joint positions. A computer vision model is trained on 2D video input and corresponding 3D motion data, and learns to recognize humanoid movement from the most limited motion capture input: a single RGB camera stream.
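Part of what makes single-camera capture hard is that a 2D image does not determine depth: different 3D joint positions can land on the same pixel. The sketch below shows this with a standard pinhole projection. The focal length, image center, and joint coordinates are made-up values for illustration.

```python
def project(joint, focal=1000.0, center=(640.0, 360.0)):
    """Pinhole projection of a camera-space (x, y, z) point to pixels."""
    x, y, z = joint
    return (focal * x / z + center[0], focal * y / z + center[1])


# Two hypothetical wrist positions on the same camera ray: one close,
# one twice as far away and twice as far off-axis.
near_wrist = (0.25, -0.125, 2.0)
far_wrist = (0.5, -0.25, 4.0)

print(project(near_wrist))
print(project(far_wrist))  # same pixel as near_wrist
```

Because the image alone cannot separate these two cases, a model has to rely on what it learned from paired 2D and 3D examples about how human bodies are proportioned and how they move.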

With enough optimizations and a well-trained model, markerless augmented reality on mobile can yield exciting 3D results, even in real time. At DeepMotion, we’ve found that pairing a robust model with biomechanical character constraints is sufficient for generating some complex motions, persistent motion through occlusion, and even interactive motion. While the quality is not on par with traditional motion capture systems, pose estimation-based motion capture promises an accessible motion data pipeline that can be applied to novel use cases.

Motion data can be extracted from the massive backlog of 2D video that exists online, or from a video shot on a mobile phone from virtually anywhere. This simple form of capture can enable user-generated content, motion capture during events, or other scenarios where sensors are impractical, unsafe, or too costly to install (think of an assembly line looking to improve ergonomics, where the cost of pausing production, or having wiring on a worker’s body, is a nonstarter). Pose estimation capture requires a single camera and zero body sensors.

Machine Learning and Data Treatment

Machine Learning and physics-based character modeling have promising applications beyond the act of capture. Retargeting, de-noising, and blending can all be achieved by state-of-the-art techniques that are well on their way to automating the most tedious, and sometimes the most costly, portions of making motion data usable.

With DeepMotion Solutions, a physics-based character filter can give 3D motion natural constraints that serve as automatic filters. By applying motion data to a biomechanically modeled body, data abnormalities are corrected by the limitations of the physically possible. This biomechanical character model also serves as an excellent retargeting tool, making the scaling of motions to different body sizes more reliable, as the physical parameters help guide the resizing process.

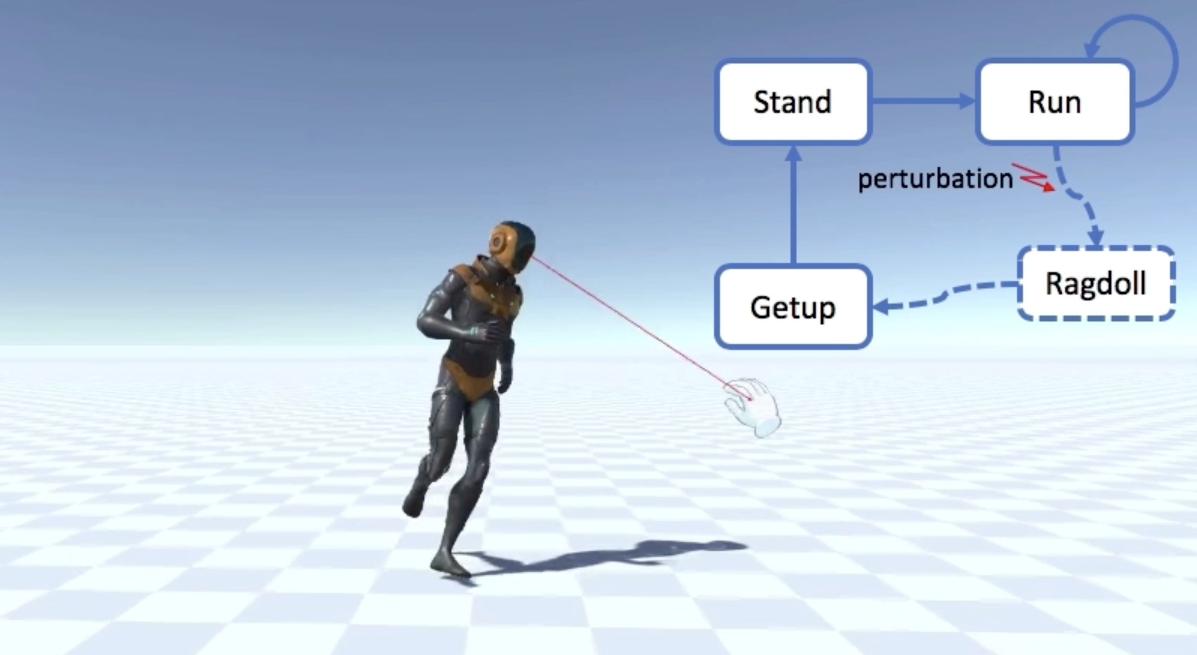
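The idea that physical limits can act as a filter can be illustrated with a much simpler stand-in: clamping joint angles to an allowed range. To be clear, this is a kinematic toy and not the physics-based filter described above; the joint names and limit values are hypothetical.

```python
# Hypothetical limits in degrees; real biomechanical models are far richer.
JOINT_LIMITS = {
    "knee_flexion": (0.0, 150.0),
    "elbow_flexion": (0.0, 145.0),
}


def clamp_pose(pose, limits=JOINT_LIMITS):
    """Clamp each joint angle into its allowed range.

    Joints without an entry in `limits` pass through unchanged.
    """
    cleaned = {}
    for joint, angle in pose.items():
        low, high = limits.get(joint, (float("-inf"), float("inf")))
        cleaned[joint] = min(max(angle, low), high)
    return cleaned


# A noisy frame in which the knee appears to bend backwards.
noisy_frame = {"knee_flexion": -12.0, "elbow_flexion": 90.0}
print(clamp_pose(noisy_frame))
```

A full physical model goes further than per-joint limits, since it also accounts for mass, contact, and balance, but the principle is the same: values a body cannot produce are treated as noise.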

Using physics-based animation and machine learning, motion sequences generated from motion data can also be strung together. Motions are first reverse engineered as physical motor skills that, moment by moment, exert torque through simulated muscles, just like in the human body. By breaking a motion into fragments, our model can create a broader motion control system that takes optimal motion fragments from two separate motions and blends them together, creating a seamless transition between formerly disparate clips.

Not only does this process automate the tedious blending cycle of integrating motion data, it also offers a path to creating endlessly unique animations by running real-time, physics-based simulations.
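For comparison, the conventional way to join two clips is a kinematic crossfade, sketched below. This is a deliberately simple baseline and not the physics-based blending described above; the `crossfade` function and the flat list-of-numbers frame format are assumptions.

```python
def crossfade(clip_a, clip_b, overlap):
    """Blend the last `overlap` frames of clip_a into the first of clip_b.

    Each clip is a list of frames; each frame is a list of joint values.
    """
    if not 0 < overlap <= min(len(clip_a), len(clip_b)):
        raise ValueError("overlap must fit inside both clips")
    blended = []
    for i in range(overlap):
        w = (i + 1) / (overlap + 1)  # weight of clip_b, rising toward 1
        a = clip_a[len(clip_a) - overlap + i]
        b = clip_b[i]
        blended.append([(1 - w) * x + w * y for x, y in zip(a, b)])
    return clip_a[:-overlap] + blended + clip_b[overlap:]


walk = [[0.0]] * 4   # toy clip: one joint value held at 0
run = [[1.0]] * 4    # toy clip: the same joint held at 1
print(crossfade(walk, run, overlap=3))
```

A crossfade like this averages poses without any notion of balance or contact, and rotations are usually interpolated with quaternions rather than raw values. A simulated character, by contrast, has to remain physically plausible throughout the transition.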

Synthetic Data and the End to End Motion Pipeline

Traditional motion capture comes at a high cost, which in turn is reflected in the cost and availability of 3D motion data more generally. (Hand crafted motion data, or keyframe animation, also comes with pricey labor costs.) This poses problems for emerging deep learning applications in need of 3D data to train their models—from health and safety motion recognition applications, to pedestrian simulations, to the early research in training robotic hardware on 3D motions using deep learning.

Larger datasets are a key enabler of exciting deep learning innovation. But creating data, which is often referred to as a form of digital oil, at scale is a nontrivial task. The open source 2D image dataset ImageNet and new natural language processing datasets have accelerated machine learning capability in their respective domains. Generating and labeling 3D motion has proven far more difficult due to resourcing costs and a higher order of complexity.

A more accessible form of motion capture can create a new funnel for 3D motion datasets, but this is only the beginning. By leveraging AI motion synthesis, as covered in depth in our Chief Scientist’s basketball motion synthesis paper, and real-time physics simulation, it is now possible to create entirely novel computer generated motion data, or synthetic data.

One unit of motion data, when used to train a character’s motion skills, can be expanded into many variations in simulation. Because DeepMotion’s form of motion training and character control is dynamic and interactive, emergent and varying motions can be created in simple simulations by introducing environmental irregularities or projectiles, or by parameterizing the motion on a physical level. These simulations can be generated by the thousands quickly, and the resulting data can be labeled based on gamified heuristics. For example, data for a fall detection model can be created by running tens of thousands of simulations in which motions that end with the character on the ground are labeled as a “fall”.
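The fall detection example can be sketched with a toy simulation. The code below is not DeepMotion's simulator: it replaces the character with a one-joint inverted pendulum kept upright by a spring-damper "controller", applies a random push, and labels the run with a simple heuristic (a lean angle past 90 degrees counts as a fall). All names and parameter values are assumptions.

```python
import math
import random


def simulate_push(push, stiffness=30.0, damping=4.0, seconds=3.0, dt=0.01):
    """Toy balancer: an inverted pendulum with a spring-damper 'controller'.

    Returns True if the lean angle ever passes 90 degrees (on the ground).
    """
    g_over_l = 9.81 / 1.0      # gravity over pendulum length (1 m)
    theta, omega = 0.0, push   # lean angle (rad), angular velocity (rad/s)
    for _ in range(int(seconds / dt)):
        accel = g_over_l * math.sin(theta) - stiffness * theta - damping * omega
        omega += accel * dt
        theta += omega * dt
        if abs(theta) > math.pi / 2:
            return True
    return False


def make_dataset(n, seed=0):
    """Run n randomized simulations and label each outcome heuristically."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        push = rng.uniform(-20.0, 20.0)
        label = "fall" if simulate_push(push) else "no_fall"
        rows.append({"push": push, "label": label})
    return rows


dataset = make_dataset(1000)
falls = sum(row["label"] == "fall" for row in dataset)
print(f"{falls} of {len(dataset)} simulated pushes were labeled as falls")
```

In a full simulation each row would carry the character's joint trajectories rather than a single push value, but the labeling logic would look much the same: the simulator knows the outcome, so the label comes for free.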

While it is still early days for 3D synthetic data as a deep learning resource, AI researchers are investing heavily in this area based on successful results in a variety of areas. At DeepMotion we see synthetic datasets made from simulation as an essential catalyst for next-gen Motion Intelligence.